Hallway

In 2020 I posted a tweet about a web-based facial mocap prototype. That tweet caught the attention of a professor at Georgia Tech and turned into a master’s thesis. That thesis connected me with the team at Hallway and turned into a full-time job.

Hallway aims to democratize 3D characters as a creative tool. We’re a startup backed by investors including Sequoia Capital and Google’s Gradient Ventures.

My contributions can be divided into the following stages:

JavaScript SDK

When I joined the company, Hallway consisted of two products:

- Hallway Tile - macOS virtual camera app

- AvatarWebkit - JavaScript motion capture SDK

My focus was improving the performance of the JavaScript SDK, which takes video from a regular webcam and outputs blendshapes and transforms for animating a 3D character. My main contributions to performance were:

- Rewriting our prediction pipeline in C++ compiled to WASM

- Offloading execution into a web worker to unblock the main thread
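
The post doesn't show how frames move between the page and the worker. Below is a minimal sketch, assuming a design where the main thread keeps at most one frame in flight and replaces any waiting frame with the newest one, so a slow prediction drops stale frames instead of building a backlog that blocks the page. `LatestFrameScheduler`, `FrameMessage`, and `PostTarget` are hypothetical names; in a browser, `PostTarget` would be a `Worker` and the payload would likely be an `ImageBitmap` passed as a transferable.

```typescript
// Hypothetical message shape; the SDK's actual worker protocol is not described in the post.
interface FrameMessage {
  type: "frame";
  id: number;
  payload: unknown; // in a browser, likely an ImageBitmap transferred to the worker
}

// Anything with postMessage: a Worker in the browser, or a fake in tests.
interface PostTarget {
  postMessage(msg: FrameMessage): void;
}

// Keeps at most one frame in flight. While the worker is busy, a newer frame
// overwrites the waiting one, so stale frames are dropped rather than queued.
class LatestFrameScheduler {
  private readonly worker: PostTarget;
  private busy = false;
  private pending: FrameMessage | null = null;
  private nextId = 0;
  dropped = 0;

  constructor(worker: PostTarget) {
    this.worker = worker;
  }

  // Call once per captured webcam frame.
  submit(payload: unknown): void {
    const msg: FrameMessage = { type: "frame", id: this.nextId++, payload };
    if (!this.busy) {
      this.busy = true;
      this.worker.postMessage(msg);
      return;
    }
    if (this.pending !== null) this.dropped++;
    this.pending = msg;
  }

  // Call from the handler for the worker's "prediction finished" message.
  onResult(): void {
    if (this.pending === null) {
      this.busy = false;
      return;
    }
    const next = this.pending;
    this.pending = null;
    this.worker.postMessage(next); // worker stays busy with the newest frame
  }
}

// Usage with a fake worker that just records which frame ids it received.
const sent: number[] = [];
const frameScheduler = new LatestFrameScheduler({ postMessage: (m) => sent.push(m.id) });
frameScheduler.submit("frame 0"); // worker idle: sent immediately
frameScheduler.submit("frame 1"); // worker busy: waits
frameScheduler.submit("frame 2"); // replaces frame 1, which is dropped
frameScheduler.onResult();        // frame 0 done: frame 2 is sent
frameScheduler.onResult();        // frame 2 done: worker is idle again
// sent is now [0, 2] and frameScheduler.dropped is 1
```

Because the render loop never waits on this scheduler, it can keep drawing at display rate while predictions arrive at whatever rate the worker sustains.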

This resulted in a more than 4x improvement in rendering speed (video shows before/after).

Even with FPS counters in each corner, this compressed video can’t truly convey page performance. Try the demo yourself, ideally on a high-refresh-rate display.

Predictions are still limited by your webcam’s frame rate, but the smooth interpolation enabled by unblocking rendering makes the whole experience feel like butter.
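
The post doesn't detail the interpolation. One way to get that effect, sketched below under that assumption, is to move each blendshape weight toward the latest prediction on every render frame with exponential smoothing. Using `1 - exp(-dt / tau)` as the blend factor keeps the motion roughly the same whether the display runs at 60 Hz or 144 Hz. `BlendshapeSmoother` and the 50 ms time constant are illustrative choices, not the SDK's.

```typescript
// Smooths blendshape weights toward the most recent prediction once per render frame.
// Hypothetical helper; weights are stored as a fixed-length array (one entry per blendshape).
class BlendshapeSmoother {
  private readonly current: Float32Array;
  private readonly target: Float32Array;
  private readonly tau: number; // time constant in seconds

  constructor(size: number, tauSeconds = 0.05) {
    this.current = new Float32Array(size);
    this.target = new Float32Array(size);
    this.tau = tauSeconds;
  }

  // Call whenever the worker returns a prediction (webcam rate, e.g. 30 Hz).
  setTarget(weights: ArrayLike<number>): void {
    this.target.set(weights);
  }

  // Call every render frame (display rate, e.g. 120 Hz) with elapsed seconds.
  update(dt: number): Float32Array {
    // exp(-a) * exp(-b) = exp(-(a + b)), so with a fixed target, ten 1 ms steps end up
    // (up to rounding) where one 10 ms step would, independent of the refresh rate.
    const alpha = 1 - Math.exp(-dt / this.tau);
    for (let i = 0; i < this.current.length; i++) {
      this.current[i] += (this.target[i] - this.current[i]) * alpha;
    }
    return this.current;
  }
}

// Usage: one 30 Hz prediction interval rendered as four 120 Hz frames.
const smoother = new BlendshapeSmoother(2);
smoother.setTarget([1, 0.5]); // two example blendshape weights
for (let frame = 0; frame < 4; frame++) smoother.update(1 / 120);
// each weight has now covered about 49% of the distance to its target
```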

Technologies used for this refactor include TFLite, OpenCV and Emscripten.

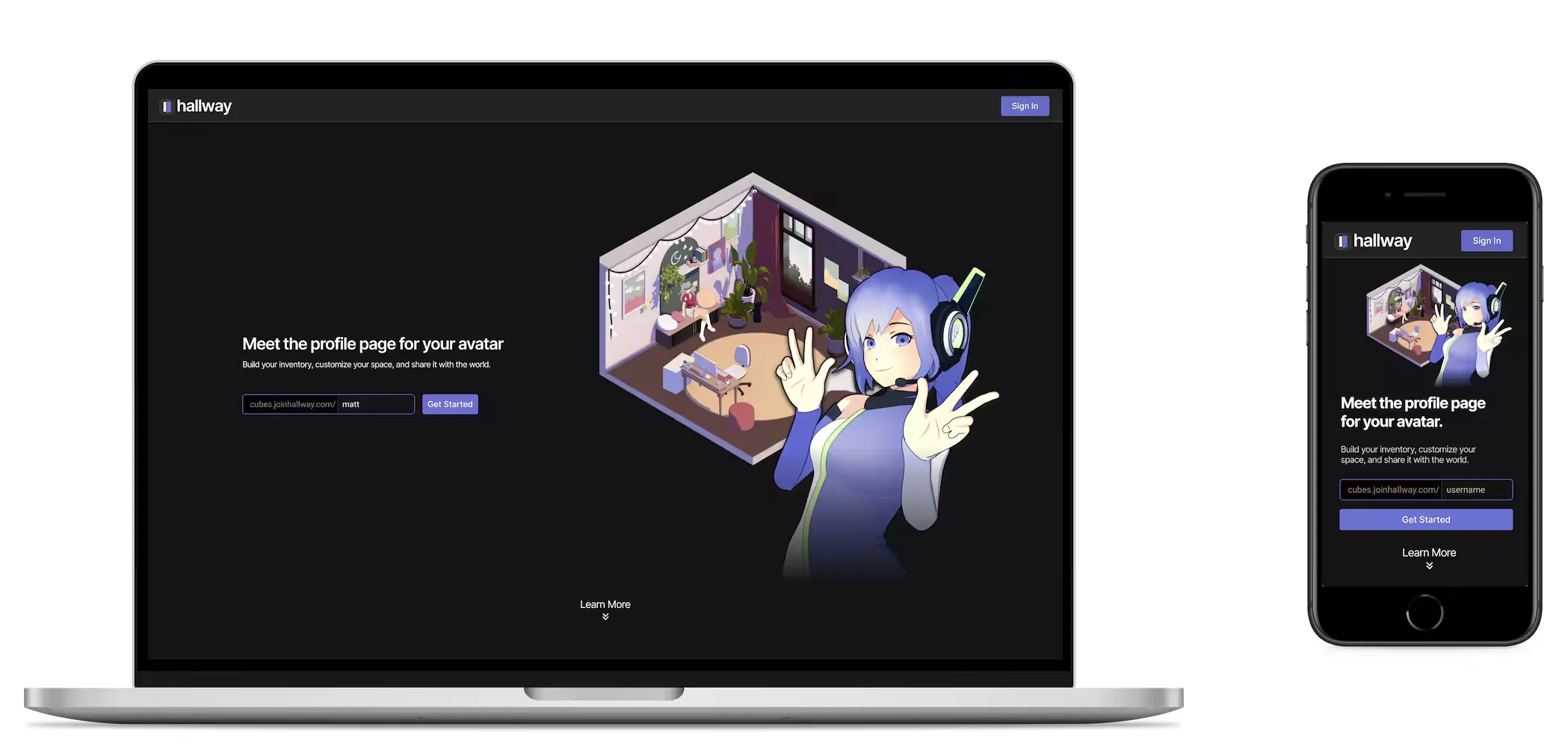

Hallway Cubes

At the start of 2023, Hallway grew from a lower-level motion capture tool into a consumer-facing platform, aiming to be the destination for:

- Finding high-quality avatars

- Embodying them with your existing hardware

- Connecting creators and fans through rich interaction

My responsibility was research, design, and development for the web part of this platform.

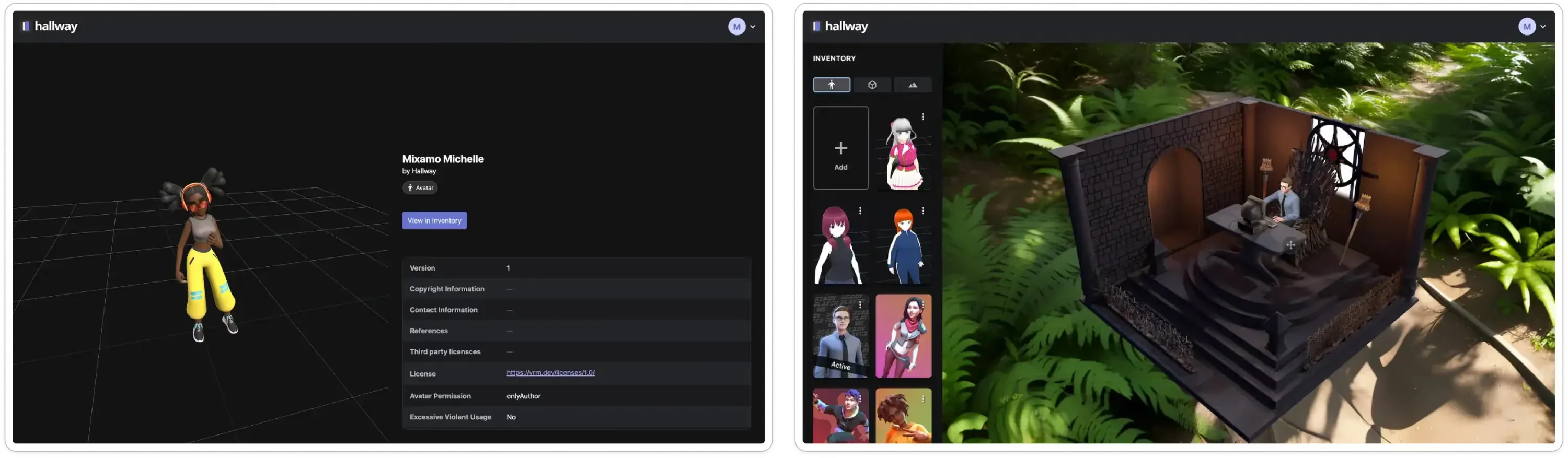

Upon sign-up, users were given a “cube”, a sort of 3D profile page that showcases their character in an isometric environment, accessible from a shareable URL. New avatars were available from our marketplace or could be imported from other services.

Users could then take control of this character using their webcam in our native desktop app, for use in meetings or streaming on social media.

Publishing high-quality 3D content on the web poses a challenge for load speed. I used several compression techniques to shrink our VRM and glTF assets while preserving quality. This involved writing a hefty custom extension in glTF-Transform to support VRM features.

Many of our assets saw a 10-30x size reduction.
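
The post doesn't list which techniques were used. As one illustration, vertex quantization is a common step in glTF compression, and glTF-Transform ships a `quantize` transform for it. The standalone sketch below shows only the core idea: storing positions as 16-bit integers relative to the mesh's bounding box instead of 32-bit floats. It's a simplified model (no glTF accessors, no `KHR_mesh_quantization` bookkeeping), and on its own it only halves raw position storage, so most of a 10-30x reduction would have to come from other steps. `quantizePositions` and `dequantizePositions` are hypothetical helpers.

```typescript
interface QuantizedPositions {
  data: Uint16Array;              // 2 bytes per component instead of 4
  min: [number, number, number];  // bounding-box minimum per axis
  step: [number, number, number]; // world units per integer step, per axis
}

// Maps each xyz component onto 0..65535 across the mesh's bounding box.
function quantizePositions(positions: Float32Array): QuantizedPositions {
  const min: [number, number, number] = [Infinity, Infinity, Infinity];
  const max: [number, number, number] = [-Infinity, -Infinity, -Infinity];
  for (let i = 0; i < positions.length; i++) {
    const axis = i % 3;
    min[axis] = Math.min(min[axis], positions[i]);
    max[axis] = Math.max(max[axis], positions[i]);
  }
  // A flat axis (zero extent) gets step 1 so every value maps to 0 without dividing by zero.
  const step = min.map((lo, axis) => (max[axis] - lo) / 65535 || 1) as [number, number, number];
  const data = new Uint16Array(positions.length);
  for (let i = 0; i < positions.length; i++) {
    const axis = i % 3;
    data[i] = Math.round((positions[i] - min[axis]) / step[axis]);
  }
  return { data, min, step };
}

// Reverses the mapping; each component lands within about half a step of the original.
function dequantizePositions(q: QuantizedPositions): Float32Array {
  const out = new Float32Array(q.data.length);
  for (let i = 0; i < q.data.length; i++) {
    const axis = i % 3;
    out[i] = q.min[axis] + q.data[i] * q.step[axis];
  }
  return out;
}

// Usage: three vertices take 36 bytes as float32 and 18 bytes quantized.
const tri = new Float32Array([0, 0, 0, 1, 2, 3, -1, 0.5, 1.5]);
const packed = quantizePositions(tri);
const restored = dequantizePositions(packed);
```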

Another technical achievement was our animation system, which uses forward and inverse kinematics to retarget a single animation file onto arbitrary characters, sometimes with wildly different proportions, while also allowing us to keep certain body parts anchored to the environment.
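
The post doesn't detail the solver. A standard building block for keeping a limb anchored, for example a hand resting on a desk while the rest of the body follows a retargeted animation, is analytic two-bone IK via the law of cosines. The sketch below works in a 2D plane for brevity; a production rig would solve in 3D with a pole vector to orient the elbow or knee. `solveTwoBone` and `forwardKinematics` are hypothetical helpers, not Hallway's code.

```typescript
interface Vec2 { x: number; y: number; }

// Angles in radians. shoulder is measured from the +x axis;
// elbow is the bend relative to the upper bone (0 = limb fully straight).
interface TwoBoneAngles { shoulder: number; elbow: number; }

// Analytic two-bone IK: bone lengths l1 (upper) and l2 (lower), target relative to the root joint.
// Unreachable targets are clamped, so the chain points at them and extends as far as it can.
function solveTwoBone(l1: number, l2: number, target: Vec2): TwoBoneAngles {
  const rawDist = Math.hypot(target.x, target.y);
  const d = Math.min(Math.max(rawDist, Math.abs(l1 - l2)), l1 + l2);
  // Law of cosines gives the bend; clamping guards against rounding just outside [-1, 1].
  // acos picks one of the two mirror-image solutions (a positive bend).
  const cosElbow = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2);
  const elbow = Math.acos(Math.min(1, Math.max(-1, cosElbow)));
  // Aim the chain at the target, then subtract the angular offset introduced by the bend.
  const shoulder =
    Math.atan2(target.y, target.x) -
    Math.atan2(l2 * Math.sin(elbow), l1 + l2 * Math.cos(elbow));
  return { shoulder, elbow };
}

// Forward kinematics: where the end effector (e.g. the hand) lands for the given angles.
function forwardKinematics(l1: number, l2: number, a: TwoBoneAngles): Vec2 {
  const jointX = l1 * Math.cos(a.shoulder);
  const jointY = l1 * Math.sin(a.shoulder);
  return {
    x: jointX + l2 * Math.cos(a.shoulder + a.elbow),
    y: jointY + l2 * Math.sin(a.shoulder + a.elbow),
  };
}

// Usage: solve for a reachable target, then check where the hand ends up.
const angles = solveTwoBone(0.3, 0.25, { x: 0.4, y: 0.2 });
const hand = forwardKinematics(0.3, 0.25, angles);
// hand is approximately { x: 0.4, y: 0.2 }
```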

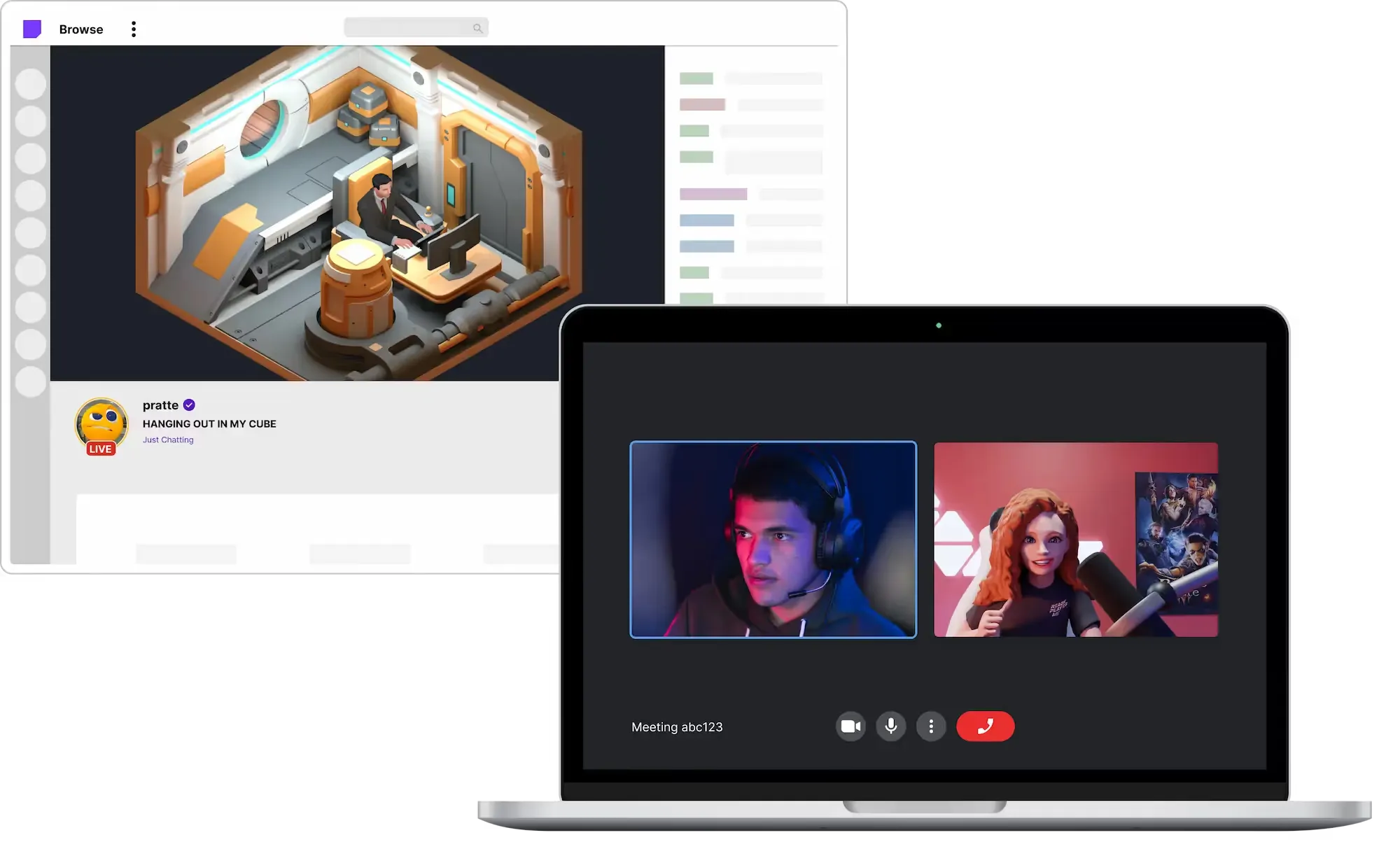

Hallway AI

Thus far, motion capture had been the main way users could interact with their characters. But with advances in LLMs, new possibilities arose for creative control without the need for real-time input.

I began exploring how our main character, “Hallway-chan”, might interact with fans automatically through Twitch and TikTok chat.

The goal for this project wasn’t to replace the human behind the screen, but to empower them with more flexible tools. For instance, Hallway-chan remembers details about individual viewers that a streamer might otherwise struggle to keep track of. And when you’re feeling under the weather, it’s reassuring knowing you have a co-host to help keep the stream energized.
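
How Hallway-chan's memory works isn't described. A minimal sketch, assuming notes about each viewer are stored by username and only the notes for viewers in the current batch of chat are added to the model's context, could look like the following. `ViewerMemory` and `ChatMessage` are hypothetical, and deciding what is worth remembering (for example, having the model extract a note from a message) is left out.

```typescript
interface ChatMessage {
  viewer: string; // platform username
  text: string;
}

// Stores a few short notes per viewer and surfaces only the relevant ones for a prompt.
class ViewerMemory {
  private readonly notes = new Map<string, string[]>();
  private readonly maxNotesPerViewer: number;

  constructor(maxNotesPerViewer = 5) {
    this.maxNotesPerViewer = maxNotesPerViewer;
  }

  remember(viewer: string, note: string): void {
    const list = this.notes.get(viewer) ?? [];
    list.push(note);
    // Drop the oldest note so each viewer's share of the prompt stays bounded.
    if (list.length > this.maxNotesPerViewer) list.shift();
    this.notes.set(viewer, list);
  }

  // Context lines for viewers in this batch of chat, in order of first appearance.
  contextFor(batch: ChatMessage[]): string {
    const viewers = Array.from(new Set(batch.map((m) => m.viewer)));
    const lines: string[] = [];
    for (const viewer of viewers) {
      const list = this.notes.get(viewer);
      if (list && list.length > 0) lines.push(`${viewer}: ${list.join("; ")}`);
    }
    return lines.join("\n");
  }
}

// Usage
const viewerMemory = new ViewerMemory();
viewerMemory.remember("ana", "has a cat named Miso");
viewerMemory.remember("ana", "is studying for a chemistry exam");
const promptContext = viewerMemory.contextFor([
  { viewer: "ana", text: "back from studying!" },
  { viewer: "bo", text: "first time here" },
]);
// promptContext === "ana: has a cat named Miso; is studying for a chemistry exam"
```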

Reception to our test streams was positive, and several creators in the community soon reached out asking for AI characters of their own.

One interesting side effect of building on web technology is that the app seamlessly integrates with OBS browser sources and custom docks.

The experience is synchronized with the public website, so fans can tune in to the 2D stream on Twitch/TikTok or watch the 3D experience on our site. One-on-one chats with an AI sometimes feel lifeless; we prioritize many-to-one interactions to preserve the real human connections in these communities.

Characters do more than just talk: they can also take actions such as performing animations, playing videos on the TV, and showering the scene with emojis. I use a carefully orchestrated streaming pipeline to deliver generated speech and animations to clients as soon as possible.
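
The pipeline itself isn't described in detail. One common way to get speech out sooner, sketched here as an assumption rather than Hallway's implementation, is to split the LLM's token stream into sentences and hand each sentence to text-to-speech as soon as it completes, instead of waiting for the full reply. `SentenceChunker` is a hypothetical name.

```typescript
// Buffers streamed LLM tokens and releases complete sentences as early as possible.
class SentenceChunker {
  private buffer = "";

  // Feed one streamed token; returns any sentences it completed.
  push(token: string): string[] {
    this.buffer += token;
    const out: string[] = [];
    // A sentence ends at ., !, or ? followed by whitespace. Requiring the whitespace
    // avoids splitting "3." in "3.14" when the number arrives across two tokens.
    const re = /[.!?]+\s+/g;
    let last = 0;
    let m: RegExpExecArray | null;
    while ((m = re.exec(this.buffer)) !== null) {
      const end = m.index + m[0].length;
      const sentence = this.buffer.slice(last, end).trim();
      if (sentence) out.push(sentence);
      last = end;
    }
    this.buffer = this.buffer.slice(last);
    return out;
  }

  // Call when the stream ends to release any trailing sentence.
  flush(): string | null {
    const rest = this.buffer.trim();
    this.buffer = "";
    return rest ? rest : null;
  }
}

// Usage: tokens arrive one at a time from the model.
const chunker = new SentenceChunker();
const sentences: string[] = [];
for (const token of ["Hi", " there", "! How", " are", " you?", " Pi is 3", ".14."]) {
  sentences.push(...chunker.push(token)); // each sentence could be sent to TTS right away
}
const tail = chunker.flush();
if (tail !== null) sentences.push(tail);
// sentences: ["Hi there!", "How are you?", "Pi is 3.14."]
```

The trade-off of waiting for whitespace is that the final sentence is only released on `flush()`, and abbreviations such as "Mr. Smith" would still be split early.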

Exposing actions and information about the scene to our agents allows streamers to “reprogram” the experience at runtime using natural language. For instance, some streamers have configured their character to award viewers TV privileges for correctly answering trivia questions.
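
The post doesn't say how actions are exposed. A minimal sketch, assuming each action is registered with a name, a description the model reads, and its required arguments, and that every call the model proposes is validated before it touches the scene, follows. `ActionRegistry` and the `grant_tv_control` action are hypothetical; in practice the descriptions would likely be passed through an LLM provider's tool- or function-calling interface.

```typescript
type ActionArgs = Record<string, string>;

interface ActionSpec {
  name: string;
  description: string;               // shown to the model so it knows when to use the action
  params: string[];                  // required argument names (all strings, for simplicity)
  run: (args: ActionArgs) => string; // performs the action and returns a short result for the model
}

interface ActionCall {
  name: string;
  args: ActionArgs;
}

class ActionRegistry {
  private readonly actions = new Map<string, ActionSpec>();

  register(spec: ActionSpec): void {
    this.actions.set(spec.name, spec);
  }

  // Text listing of available actions, e.g. for a system prompt or tool definitions.
  describe(): string {
    return Array.from(this.actions.values())
      .map((a) => `${a.name}(${a.params.join(", ")}): ${a.description}`)
      .join("\n");
  }

  // Rejects unknown actions and missing arguments instead of trusting the model's output.
  dispatch(call: ActionCall): string {
    const spec = this.actions.get(call.name);
    if (!spec) return `error: unknown action "${call.name}"`;
    const missing = spec.params.filter((p) => !(p in call.args));
    if (missing.length > 0) return `error: missing ${missing.join(", ")}`;
    return spec.run(call.args);
  }
}

// Usage
let tvController: string = "streamer";
const registry = new ActionRegistry();
registry.register({
  name: "grant_tv_control",
  description: "Give a viewer control of the TV, e.g. as a trivia prize.",
  params: ["viewer"],
  run: (args) => {
    tvController = args.viewer;
    return `TV control given to ${args.viewer}`;
  },
});
// A call parsed from the model's output after a viewer answers correctly:
const dispatchResult = registry.dispatch({ name: "grant_tv_control", args: { viewer: "ana" } });
// dispatchResult === "TV control given to ana" and tvController === "ana"
```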

Looking forward, we’re arranging partnerships with larger brands and working on ways for streamers to further customize their character and its interactions.